The success of Generative AI-based Large Language Models (LLMs) has sparked a wave of innovative techniques. These techniques have expanded the range of use cases by applying LLMs to intelligent decision-making and to the automation of workflows and applications. Over time, use cases such as Natural Language to SQL (NL-to-SQL), Natural Language to API (NL-to-API), and Natural Language to filters have emerged. Frameworks like Langchain have introduced agent-based implementations for these use cases, enabling LLMs to produce more actionable results. Different types of agents follow different reasoning and decision-making steps, but they are built from the same fundamental blocks: toolkits, tools, and LLMs. In this article, we explore Natural Language Reporting, in which an LLM generates SQL queries and an agent executes them to produce responses in natural language. We examine two distinct types of agents, ReAct and OpenAI’s Function Calling, to understand how their approaches differ.

Let’s quickly familiarize ourselves with some key terms.

What is an Agent?

An agent is an entity that employs an LLM to facilitate the reasoning process and acts according to the model’s suggestions.

What is a Toolkit?

A toolkit refers to a collection of tools.

What is a Tool?

A tool is a function with a specific name and description, which may or may not require input arguments. For example, a tool can invoke an API, execute a SQL query, or run a mathematical algorithm.
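To make these definitions concrete, here is a minimal, framework-free sketch of a tool and a toolkit in plain Python. The `Tool` and `Toolkit` classes and both example tools are hypothetical illustrations, not Langchain's own classes; they only show the idea of a named, described function collected into a lookup-by-name toolkit.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Tool:
    """A named function plus a description the LLM reads when choosing an action."""
    name: str
    description: str
    func: Callable[[str], str]

    def run(self, tool_input: str = "") -> str:
        return self.func(tool_input)


@dataclass
class Toolkit:
    """A toolkit is simply a collection of tools, looked up by name."""
    tools: List[Tool] = field(default_factory=list)

    def get(self, name: str) -> Tool:
        for tool in self.tools:
            if tool.name == name:
                return tool
        raise KeyError(f"Unknown tool: {name}")


# Hypothetical tools: one ignores its input, one performs a small computation.
list_tables = Tool("list_tables", "Lists the tables in the database.",
                   lambda _: "account, bill, usage")
add_numbers = Tool("add_numbers", "Adds comma-separated numbers.",
                   lambda s: str(sum(float(x) for x in s.split(","))))

toolkit = Toolkit([list_tables, add_numbers])
print(toolkit.get("list_tables").run())         # account, bill, usage
print(toolkit.get("add_numbers").run("1,2.5"))  # 3.5
```

An agent only ever sees the name and description; deciding which `run` to call is the part delegated to the LLM.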

ReAct-Based Agent Implementation

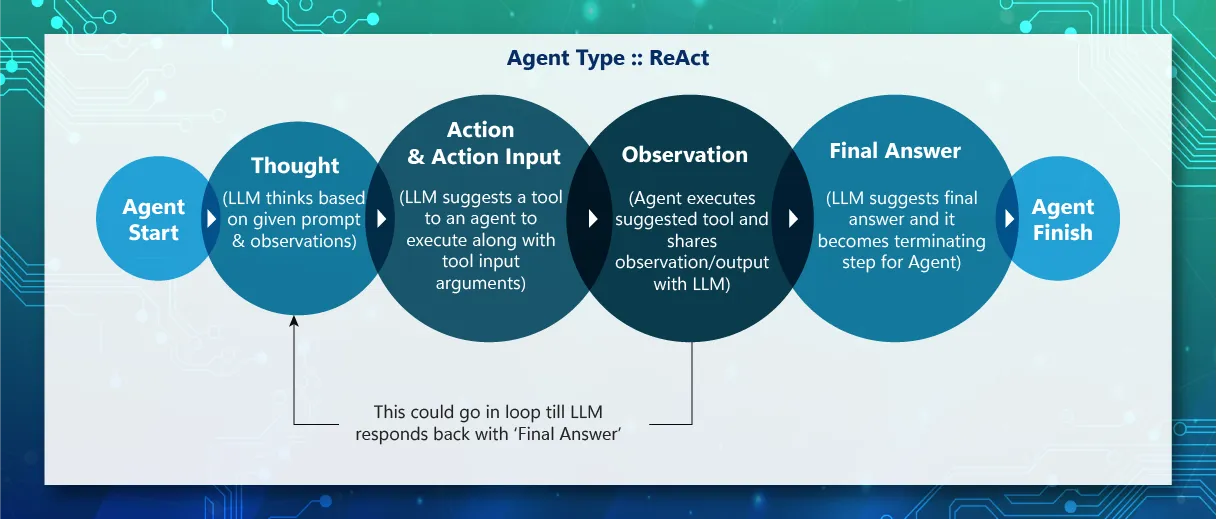

The ReAct pattern synergizes Reasoning and Acting in language models: the model reasons (thinks the problem through logically) and then takes an action or a sequence of actions. An agent calls the LLM back and forth to reason until it decides which action to take, such as making an API call or executing a query. Essentially, agents make it possible to build smart systems that work independently as part of a workflow and make decisions without a human in the loop.
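In a ReAct agent, the reasoning happens entirely in text: the agent writes each tool's name and description into the prompt and instructs the LLM to reply with Thought, Action, and Action Input lines. This is also what "DESCRIPTION" refers to in Langchain's `ZERO_SHOT_REACT_DESCRIPTION` agent type used later: the tool is chosen based on its description. The sketch below assembles such a prompt. Its wording is modeled on, but not identical to, Langchain's zero-shot ReAct template, and `build_react_prompt` and the tool descriptions are hypothetical.

```python
from typing import Dict


def build_react_prompt(tools: Dict[str, str], question: str, scratchpad: str = "") -> str:
    """Assemble a zero-shot ReAct prompt from tool names and descriptions."""
    tool_lines = "\n".join(f"{name}: {desc}" for name, desc in tools.items())
    tool_names = ", ".join(tools)
    return (
        "Answer the question as best you can. You have access to these tools:\n\n"
        f"{tool_lines}\n\n"
        "Use the following format:\n\n"
        "Question: the input question you must answer\n"
        "Thought: you should always think about what to do\n"
        f"Action: the action to take, should be one of [{tool_names}]\n"
        "Action Input: the input to the action\n"
        "Observation: the result of the action\n"
        "... (Thought/Action/Action Input/Observation can repeat N times)\n"
        "Thought: I now know the final answer\n"
        "Final Answer: the final answer to the original question\n\n"
        f"Question: {question}\n"
        # The scratchpad accumulates earlier Thought/Action/Observation turns.
        f"Thought:{scratchpad}"
    )


# Hypothetical one-line descriptions for the SQL tools used later in this article.
sql_tools = {
    "sql_db_list_tables": "Lists the tables in the database.",
    "sql_db_schema": "Returns the schema and sample rows for the given tables.",
    "sql_db_query_checker": "Checks a SQL query for mistakes before it is run.",
    "sql_db_query": "Executes a SQL query and returns the result.",
}
prompt = build_react_prompt(sql_tools, "What is the maximum bill amount?")
print(prompt)
```

Because the LLM's reply is free text in this format, the agent must later parse it back into a tool name and input.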

ReAct Agent Processing Steps

Implementing Natural Language Reporting Agent

We will build a quick Proof of Concept (PoC) to demonstrate the operation of a ReAct-based agent. The PoC uses a dummy SQLite-based Billing database with tables such as account, bill, and usage. The following sections provide a step-by-step walkthrough of the code snippets from the PoC implementation, which employs the Langchain library.
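If you want to reproduce the walkthrough without the original `Billing.db`, a stand-in can be built with Python's built-in `sqlite3` module. This sketch recreates only the `bill` table, using the schema and the three sample rows that appear in the first agent log in this article; the `account` and `usage` tables are left out because their schemas are not shown, and `build_billing_db` is a hypothetical helper.

```python
import sqlite3

# Schema copied from the agent log in this article.
BILL_DDL = """
CREATE TABLE bill (
    record_type VARCHAR(50),
    billing_id INTEGER,
    id_account_number VARCHAR(50),
    timestamp INTEGER,
    start_datetime INTEGER,
    end_datetime INTEGER,
    commodity VARCHAR(50),
    charge_type VARCHAR(50),
    billed_amount REAL,
    billed_rate VARCHAR(50),
    reading_id VARCHAR(50)
)
"""

# The three sample rows the agent printed (not the full PoC dataset).
SAMPLE_ROWS = [
    ("Upsert", 383339888485, "3833695876_0698360369", 20220930, 20220919, 20220929,
     "E", "TOTAL", 50.76, "ABC", "3833695876_0698360369_e_30330919"),
    ("Upsert", 579935541033, "5796675630_0437900000", 20220419, 20220318, 20220418,
     "E", "OFFPEAK", 0.29, "ABC", "5796675630_0437900000_e_30330318"),
    ("Upsert", 358183305836, "3584113533_8633030000", 20200716, 20200616, 20200715,
     "E", "PEAK", 82.45, "DEF", "3584113533_8633030000_e_30300616"),
]


def build_billing_db(path: str = ":memory:") -> sqlite3.Connection:
    """Create the bill table and load the sample rows."""
    conn = sqlite3.connect(path)
    conn.execute(BILL_DDL)
    conn.executemany("INSERT INTO bill VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)", SAMPLE_ROWS)
    conn.commit()
    return conn


conn = build_billing_db()
print(conn.execute("SELECT MAX(billed_amount) AS max_bill FROM bill").fetchall())
# [(82.45,)] for these three rows only; the full PoC data returned 1114.02
```

Passing `"Billing.db"` as the path (once, on a fresh file) writes a database that `SQLDatabase.from_uri("sqlite:///Billing.db")` in step 1 can open.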

1. Define a database connection to the SQLite database (Billing.db)

```python
from langchain.sql_database import SQLDatabase

# Point Langchain at the local SQLite billing database
db = SQLDatabase.from_uri("sqlite:///Billing.db")
```

2. Define the Large Language Model to use. Here, we use GPT-4.

```python
from langchain.chat_models import ChatOpenAI

temperature = 0  # assumed value; the original PoC does not state it
llm = ChatOpenAI(temperature=temperature, model_name="gpt-4")
```

3. Define the toolkit (collection of various functions).

SQLDatabaseToolkit:

- [sql_db_list_tables]: Responsible for listing tables.

- [sql_db_schema]: Capable of listing the schema of a table.

- [sql_db_query]: Executes a SQL query.

- [sql_db_query_checker]: Checks the validity of a SQL query.

```python
from langchain.agents.agent_toolkits import SQLDatabaseToolkit

# Bundle the four SQL tools, bound to our database and LLM
toolkit = SQLDatabaseToolkit(db=db, llm=llm)
```

4. Define an agent of type ZERO_SHOT_REACT_DESCRIPTION, one of the agent categories available in Langchain.

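```python
from langchain.agents import create_sql_agent
from langchain.agents.agent_types import AgentType

# Build a ReAct-style SQL agent that picks tools based on their descriptions
agent_executor = create_sql_agent(
    llm=llm,
    toolkit=toolkit,
    agent_type=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True,  # print each Thought/Action/Observation step
    handle_parsing_errors=True,  # send malformed LLM output back to the LLM instead of raising
)
```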

5. Run the agent chain with the user’s query prompt. Note that the agent runs inside Langchain’s OpenAI callback context manager, which tracks the number of tokens used and the cost of generating the response.

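```python
from langchain.callbacks import get_openai_callback

query_prompt = "What is the maximum bill amount?"  # example user question
with get_openai_callback() as cb:
    out_response = agent_executor.run(query_prompt)
print(f"Tokens: {cb.total_tokens}, LLM requests: {cb.successful_requests}, cost: ${cb.total_cost:.4f}")
```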
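Under the hood, the four tools from step 3 wrap simple database operations. The sketch below shows plain-`sqlite3` equivalents for illustration only. These functions are hypothetical stand-ins that merely mirror the tool names: Langchain's `sql_db_schema` also appends sample rows (as the logs below show), and its `sql_db_query_checker` asks the LLM to review the query, whereas this sketch swaps in SQLite's `EXPLAIN QUERY PLAN` as a purely syntactic check. The `account` and `usage` tables here have made-up single columns.

```python
import sqlite3


def sql_db_list_tables(conn: sqlite3.Connection) -> str:
    """Comma-separated list of tables, similar to what the agent observes."""
    rows = conn.execute(
        "SELECT name FROM sqlite_master WHERE type='table' ORDER BY name"
    ).fetchall()
    return ", ".join(name for (name,) in rows)


def sql_db_schema(conn: sqlite3.Connection, table: str) -> str:
    """Return the CREATE TABLE statement for one table."""
    row = conn.execute(
        "SELECT sql FROM sqlite_master WHERE type='table' AND name=?", (table,)
    ).fetchone()
    if row is None:
        raise ValueError(f"No such table: {table}")
    return row[0]


def sql_db_query_checker(conn: sqlite3.Connection, query: str) -> str:
    """Validate a query by asking SQLite to plan it (no LLM involved)."""
    conn.execute("EXPLAIN QUERY PLAN " + query)  # raises sqlite3.Error if invalid
    return query


def sql_db_query(conn: sqlite3.Connection, query: str) -> str:
    """Execute the query and return the rows as a string, as the agent sees them."""
    return str(conn.execute(query).fetchall())


# Hypothetical, minimal tables so the functions have something to inspect.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE account (id_account_number VARCHAR(50));
    CREATE TABLE usage (reading_id VARCHAR(50));
    CREATE TABLE bill (billing_id INTEGER, billed_amount REAL);
    INSERT INTO bill VALUES (1, 50.76), (2, 0.29), (3, 82.45);
""")

print(sql_db_list_tables(conn))  # account, bill, usage
query = sql_db_query_checker(conn, "SELECT MAX(billed_amount) AS max_bill FROM bill")
print(sql_db_query(conn, query))  # [(82.45,)]
```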

Demonstration of Natural Language Reporting Agent

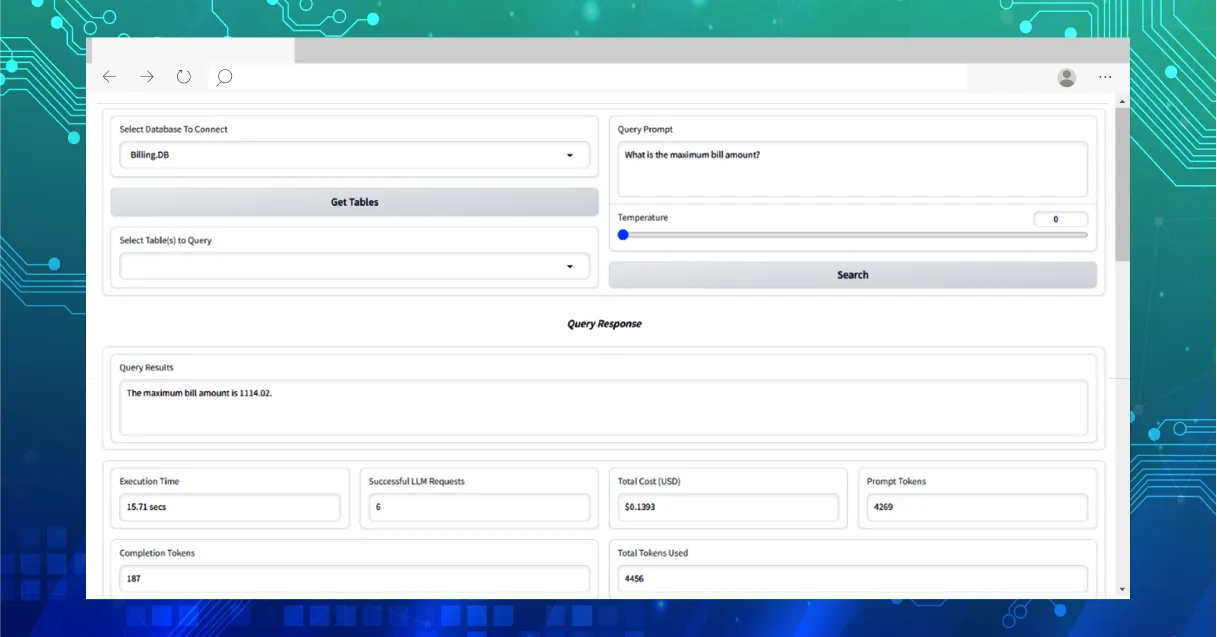

We built a quick UI with Gradio to demonstrate the agent. The UI also reports key observability metrics: execution time, the number of LLM requests, the total number of tokens, and the cost.

Log Details of Agent Interactions

The following logs clearly depict the sequence of interactions between an agent and the LLM to derive the appropriate response to a given user prompt.

Step 1: LLM Decision-Making Process

a. The LLM suggests the ‘Action’ and ‘Action Input’ during the decision-making process.

Step 2: Agent Response

a. The agent then responds by launching the recommended tool (Action).

b. The agent supplies the input parameters (Action Input) to the function.

Step 3: Observation

a. The agent presents the output of the function (tool) to the LLM as an ‘Observation’.

Step 4: LLM Feedback Loop

a. Subsequently, the LLM provides a ‘Thought’.

b. The LLM suggests the next series of ‘Action’ & ‘Action Input’ for the agent.

Step 5: Repetition

a. This entire procedure repeats in a cycle until the LLM provides a ‘Final Answer’ response.

Step 6: Communication to User

a. The final response is communicated to the user.

Refer to the ReAct Agent diagram mentioned earlier for additional details.

```text
> Entering new AgentExecutor chain...
Action: sql_db_list_tables
Action Input: ""
Observation: account, bill, usage
Thought:The 'bill' table seems to be the most relevant for this query. I should check its schema to understand its structure and the data it contains.
Action: sql_db_schema
Action Input: "bill"
Observation:
CREATE TABLE bill (
    record_type VARCHAR(50),
    billing_id INTEGER,
    id_account_number VARCHAR(50),
    timestamp INTEGER,
    start_datetime INTEGER,
    end_datetime INTEGER,
    commodity VARCHAR(50),
    charge_type VARCHAR(50),
    billed_amount REAL,
    billed_rate VARCHAR(50),
    reading_id VARCHAR(50)
)

/*
3 rows from bill table:
record_type billing_id id_account_number timestamp start_datetime end_datetime commodity charge_type billed_amount billed_rate reading_id
Upsert 383339888485 3833695876_0698360369 20220930 20220919 20220929 E TOTAL 50.76 ABC 3833695876_0698360369_e_30330919
Upsert 579935541033 5796675630_0437900000 20220419 20220318 20220418 E OFFPEAK 0.29 ABC 5796675630_0437900000_e_30330318
Upsert 358183305836 3584113533_8633030000 20200716 20200616 20200715 E PEAK 82.45 DEF 3584113533_8633030000_e_30300616
*/
```

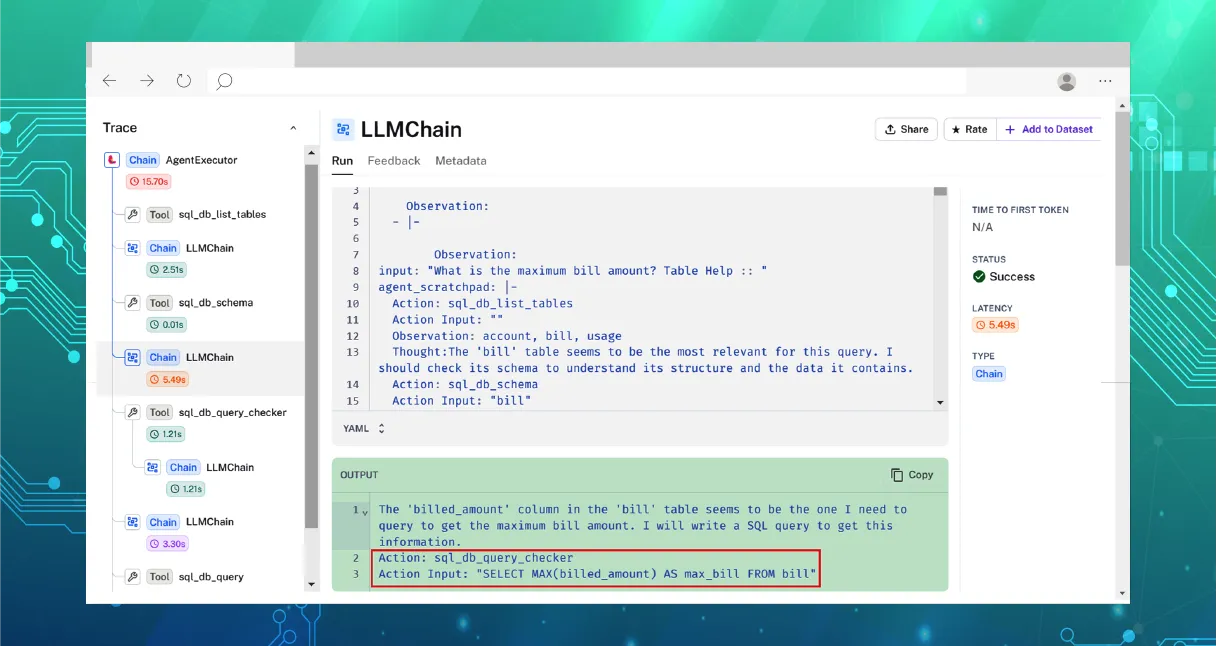

```text
Thought:The 'billed_amount' column in the 'bill' table seems to be the one I need to query to get the maximum bill amount. I will write a SQL query to get this information.
Action: sql_db_query_checker
Action Input: "SELECT MAX(billed_amount) AS max_bill FROM bill"
Observation: SELECT MAX(billed_amount) AS max_bill FROM bill
Thought:The query is correct. Now I will execute it to get the maximum bill amount.
Action: sql_db_query
Action Input: "SELECT MAX(billed_amount) AS max_bill FROM bill"
Observation: [(1114.02,)]
Thought:I now know the final answer
Final Answer: The maximum bill amount is 1114.02.
```
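The cycle in this log can be reduced to a short loop. The sketch below is a simplified, hypothetical ReAct loop, not Langchain's `AgentExecutor`: a scripted function stands in for GPT-4 and replays the decisions from the log above, and stub tools return the observations shown there. Comments map each line to the numbered steps described earlier.

```python
import re
from typing import Callable, Dict

# Captures the tool name and its input from "Action: ...\nAction Input: ..." text.
ACTION_RE = re.compile(r"Action:\s*(.+?)\s*\nAction Input:\s*(.*)", re.DOTALL)


def run_react(llm: Callable[[str], str], tools: Dict[str, Callable[[str], str]],
              question: str, max_steps: int = 10) -> str:
    """Minimal ReAct loop: ask the LLM, run the chosen tool, feed back the observation."""
    scratchpad = ""
    for _ in range(max_steps):
        output = llm(f"Question: {question}\nThought:{scratchpad}")  # Steps 1 and 4
        if "Final Answer:" in output:  # Step 5: the cycle ends here
            return output.split("Final Answer:", 1)[1].strip()  # Step 6
        match = ACTION_RE.search(output)
        if match is None:
            raise ValueError(f"Could not parse LLM output: {output!r}")
        action = match.group(1).strip()
        action_input = match.group(2).strip().strip('"')
        observation = tools[action](action_input)  # Step 2: run the tool
        scratchpad += f"{output}\nObservation: {observation}\nThought:"  # Step 3
    raise RuntimeError("Stopped after max_steps without a Final Answer")


# Scripted stand-in for the LLM, replaying the decisions from the log above.
replies = iter([
    'Action: sql_db_list_tables\nAction Input: ""',
    "The 'bill' table seems relevant.\nAction: sql_db_schema\nAction Input: \"bill\"",
    'Action: sql_db_query\nAction Input: "SELECT MAX(billed_amount) AS max_bill FROM bill"',
    "I now know the final answer\nFinal Answer: The maximum bill amount is 1114.02.",
])


def scripted_llm(prompt: str) -> str:
    return next(replies)


# Stub tools returning the observations recorded in the log.
stub_tools = {
    "sql_db_list_tables": lambda _: "account, bill, usage",
    "sql_db_schema": lambda table: f"CREATE TABLE {table} (...)",
    "sql_db_query": lambda query: "[(1114.02,)]",
}

print(run_react(scripted_llm, stub_tools, "What is the maximum bill amount?"))
```

Everything the loop learns from the LLM arrives as free text, which is why the regular expression is the weakest link in this design.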

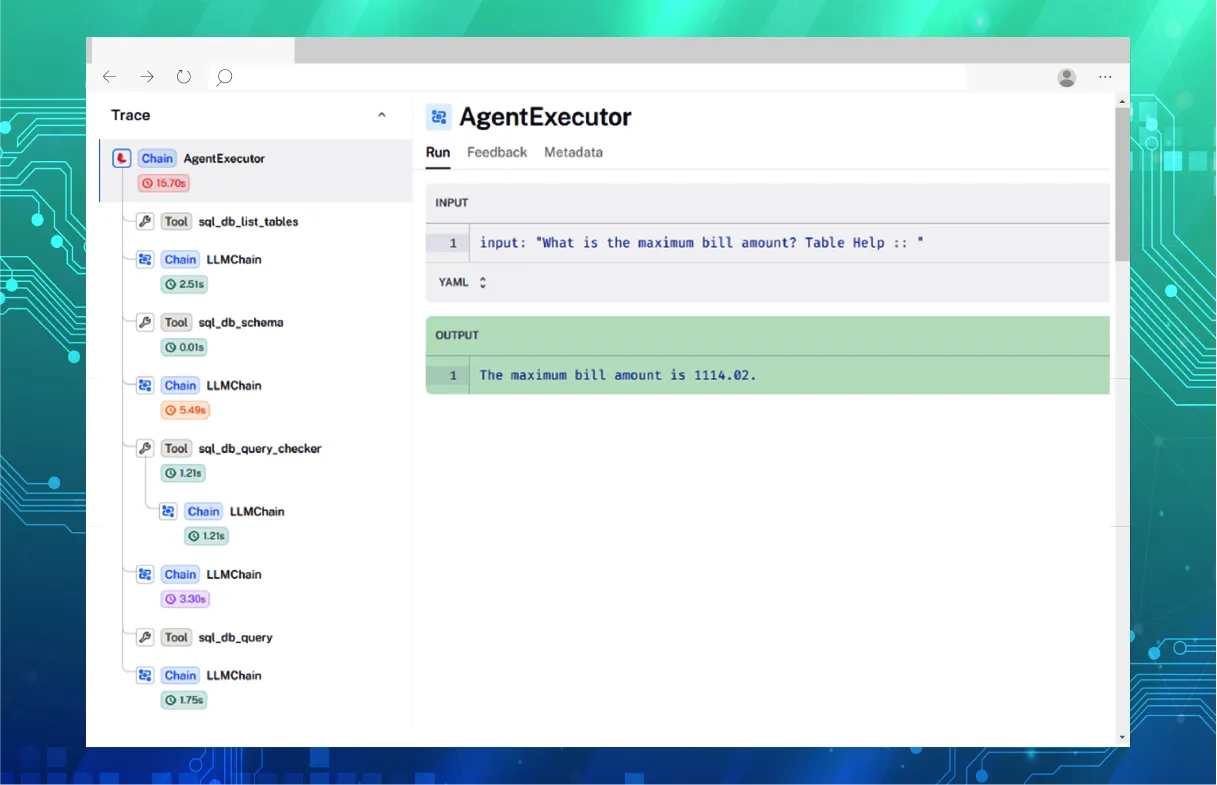

Agent’s Tracing in the Langsmith Tool

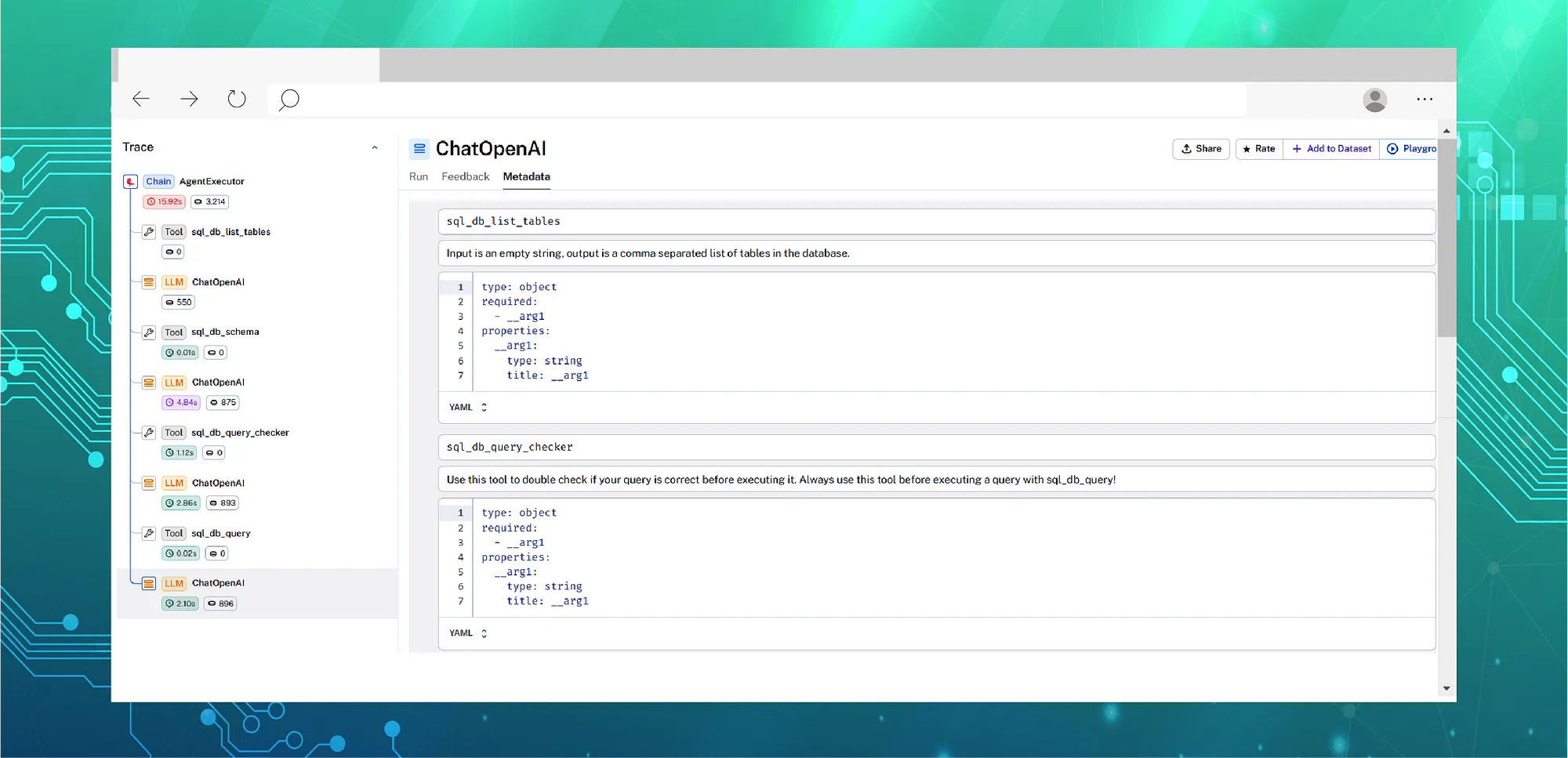

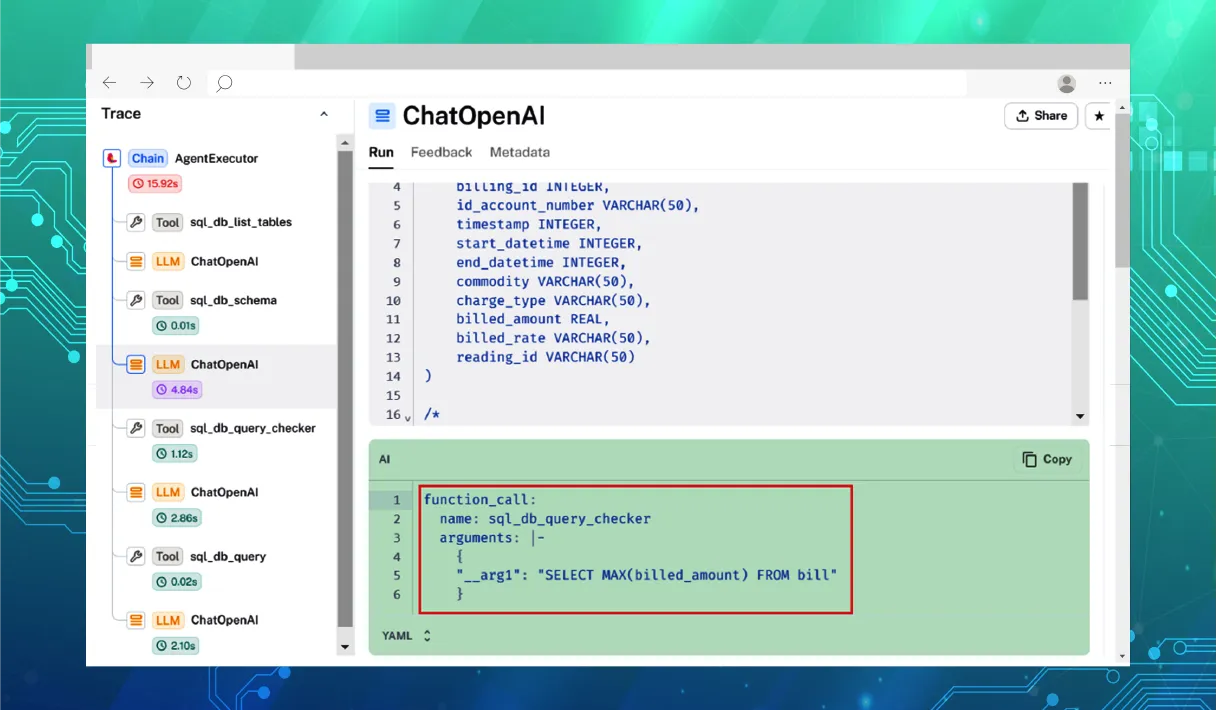

Langsmith, a monitoring, debugging, and tracing tool from Langchain, was in its beta stage when this blog was written. The following illustration from Langsmith shows the multiple actions an agent takes in response to a user’s prompt, along with the tools and LLM chains invoked during the process.
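Tracing to Langsmith needs no changes to the agent code; at the time of writing it was switched on through environment variables that Langchain reads at run time. The sketch below sets them from Python before the agent runs. The project name is a hypothetical example, the API key must come from your own Langsmith account, and newer releases may use differently named variables, so check the current Langsmith documentation.

```python
import os

# Turn on tracing for every chain and agent run in this process.
os.environ["LANGCHAIN_TRACING_V2"] = "true"
os.environ["LANGCHAIN_ENDPOINT"] = "https://api.smith.langchain.com"
os.environ["LANGCHAIN_PROJECT"] = "nl-reporting-poc"  # hypothetical project name

# The API key belongs to your Langsmith account; read it from the environment
# rather than hard-coding it.
if not os.environ.get("LANGCHAIN_API_KEY"):
    print("Set LANGCHAIN_API_KEY before running the agent to send traces to Langsmith.")
```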

The image below shows an intermediate LLM chain response for this ReAct-based agent. The entire output is a plain string containing a tool name (Action) and the input for the chosen tool (Action Input). The agent parses this string to extract the Action and Action Input, so this step can fail if the LLM does not produce its output in the expected format.
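This fragility is easy to reproduce. The sketch below is a simplified, hypothetical ReAct output parser (Langchain's own parser is more thorough). It extracts Action and Action Input with a regular expression, and `parse_or_observe` approximates the idea behind the `handle_parsing_errors=True` option used earlier: a formatting mistake becomes a message returned to the LLM as an observation instead of an exception that ends the run. The `"parse_error"` label is made up for this example.

```python
import re
from typing import Tuple

ACTION_RE = re.compile(r"Action\s*:\s*(.*?)\s*\n\s*Action\s*Input\s*:\s*(.*)", re.DOTALL)


class OutputParserError(ValueError):
    """Raised when the LLM's text does not follow the ReAct format."""


def parse_react(text: str) -> Tuple[str, str]:
    """Return ("final", answer) or (tool_name, tool_input) from raw LLM text."""
    if "Final Answer:" in text:
        return "final", text.split("Final Answer:", 1)[1].strip()
    match = ACTION_RE.search(text)
    if match is None:
        raise OutputParserError(f"Could not parse LLM output: {text!r}")
    return match.group(1).strip(), match.group(2).strip().strip('"')


def parse_or_observe(text: str) -> Tuple[str, str]:
    """Instead of crashing, return the error so it can be sent back as an Observation."""
    try:
        return parse_react(text)
    except OutputParserError as err:
        return "parse_error", f"Invalid format, please use Action/Action Input. {err}"


print(parse_react('Thought: check the schema\nAction: sql_db_schema\nAction Input: "bill"'))
print(parse_or_observe("The bill table is probably the right one."))
```

The second call shows the failure mode: a perfectly reasonable sentence from the LLM still cannot be acted on, because it lacks the expected labels.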

Agents Based on Function Calling

What is Function Calling?

Function calling is a feature introduced by OpenAI in which the gpt-3.5-turbo-0613 and gpt-4-0613 models (and later versions) have been fine-tuned to understand function definitions written in a predefined JSON structure and supplied with the prompt. The model can then intelligently choose to output a JSON object containing the arguments needed to call one of those functions (a tool, in agent terms). Unlike ReAct, both the input (functions) and the output (function_call) are structured as JSON from the LLM’s perspective, and each has a dedicated place in GPT’s message structure: functions are sent as part of the prompt, and function_call is returned as part of the completion tokens. The following example of a message conversation illustrates this structure.

Further information can be found in this blog post.

```json
{
  "messages": [
    {"role": "user", "content": "What is the weather like in Boston?"},
    {"role": "assistant", "content": null, "function_call": {"name": "get_current_weather", "arguments": "{ \"location\": \"Boston, MA\"}"}},
    {"role": "function", "name": "get_current_weather", "content": "{\"temperature\": \"22\", \"unit\": \"celsius\", \"description\": \"Sunny\"}"}
  ],
  "functions": [
    {
      "name": "get_current_weather",
      "description": "Get the current weather in a given location",
      "parameters": {
        "type": "object",
        "properties": {
          "location": {
            "type": "string",
            "description": "The city and state, e.g. San Francisco, CA"
          },
          "unit": {
            "type": "string",
            "enum": ["celsius", "fahrenheit"]
          }
        },
        "required": ["location"]
      }
    }
  ]
}
```
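On the application side, the agent's job is to decode the `arguments` value (which the model returns as a JSON-encoded string, not a nested object), call the matching local function, and append the result as a `function` role message. The sketch below does this for the example conversation above. `get_current_weather` is a hypothetical stub that returns the same values shown in the example instead of calling a real weather service, and no OpenAI API call is made.

```python
import json


def get_current_weather(location: str, unit: str = "celsius") -> dict:
    """Hypothetical stub; a real implementation would call a weather API."""
    return {"temperature": "22", "unit": unit, "description": "Sunny"}


AVAILABLE_FUNCTIONS = {"get_current_weather": get_current_weather}


def dispatch(assistant_message: dict) -> dict:
    """Execute the function the model asked for and build the 'function' role reply."""
    call = assistant_message["function_call"]
    # The arguments arrive as a JSON string and must be decoded before the call.
    kwargs = json.loads(call["arguments"])
    result = AVAILABLE_FUNCTIONS[call["name"]](**kwargs)
    return {"role": "function", "name": call["name"], "content": json.dumps(result)}


assistant_message = {
    "role": "assistant",
    "content": None,
    "function_call": {"name": "get_current_weather",
                      "arguments": '{ "location": "Boston, MA"}'},
}
print(dispatch(assistant_message))
```

No free-text parsing is involved; the remaining failure point is `json.loads`, which raises if the model ever emits malformed arguments.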

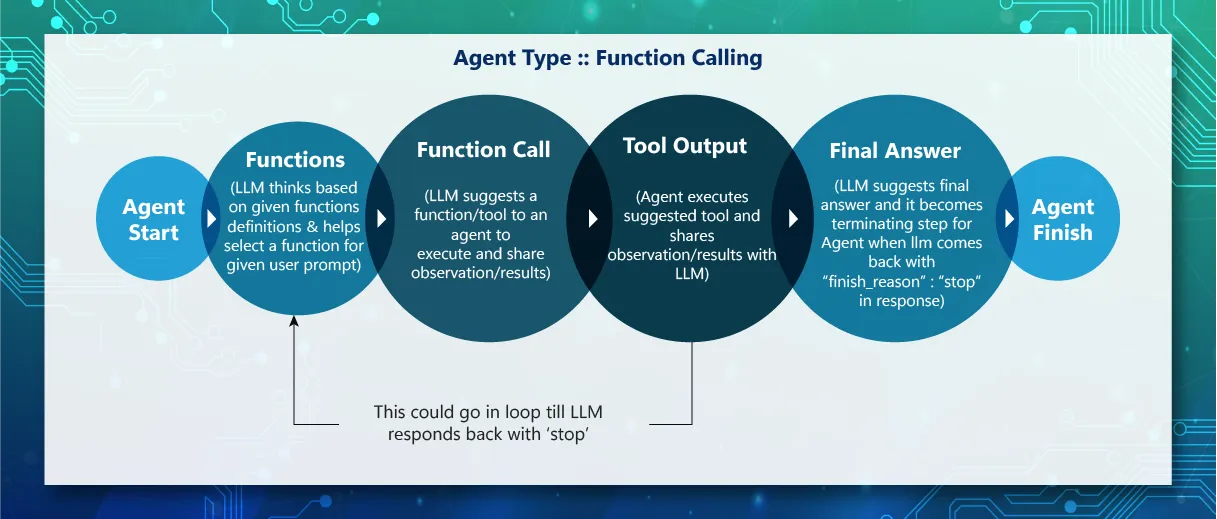

Function Calling Agent Processing Steps

Code Changes to Use Function Calling

```python
# Creating the agent: only agent_type differs from the ReAct version
agent_executor = create_sql_agent(
    llm=llm,
    toolkit=toolkit,
    agent_type=AgentType.OPENAI_FUNCTIONS,
    verbose=True,
    handle_parsing_errors=True,
)
```

The following image shows the definitions of all the functions (tools) in a structured format, including their names, descriptions, and parameter details. These definitions are sent to the LLM as input to help it pick which function the agent should invoke.
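Each tool has to be expressed in the same JSON-schema shape as the `functions` array in the weather example. The sketch below builds such definitions by hand for the four SQL tools. The helper `to_function_definition`, the parameter names, and the descriptions are hypothetical paraphrases; Langchain generates the real definitions from each tool's own name, description, and argument schema.

```python
import json
from typing import Dict, List


def to_function_definition(name: str, description: str, params: Dict[str, str]) -> Dict:
    """Describe a tool whose parameters are all strings in OpenAI 'functions' format."""
    return {
        "name": name,
        "description": description,
        "parameters": {
            "type": "object",
            "properties": {p: {"type": "string", "description": d} for p, d in params.items()},
            "required": list(params),  # every listed parameter is mandatory here
        },
    }


# Hypothetical parameter names and paraphrased descriptions for the SQL toolkit.
functions: List[Dict] = [
    to_function_definition("sql_db_list_tables", "List the tables in the database.", {}),
    to_function_definition("sql_db_schema", "Get the schema and sample rows of tables.",
                           {"table_names": "Comma-separated list of table names"}),
    to_function_definition("sql_db_query_checker", "Check a SQL query before executing it.",
                           {"query": "The SQL query to check"}),
    to_function_definition("sql_db_query", "Execute a SQL query and return the result.",
                           {"query": "The SQL query to execute"}),
]

print(json.dumps(functions[1], indent=2))
```

Because the model answers with a `function_call` naming one of these entries, the agent can look the tool up by name instead of parsing free text.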

```text
> Entering new AgentExecutor chain...

Invoking: `sql_db_list_tables` with ``

account, bill, usage

Invoking: `sql_db_schema` with `bill`

CREATE TABLE bill (
    record_type VARCHAR(50),
    billing_id INTEGER,
    id_account_number VARCHAR(50),
    timestamp INTEGER,
    start_datetime INTEGER,
    end_datetime INTEGER,
    commodity VARCHAR(50),
    charge_type VARCHAR(50),
    billed_amount REAL,
    billed_rate VARCHAR(50),
    reading_id VARCHAR(50)
)

/*
3 rows from bill table:
record_type billing_id id_account_number timestamp start_datetime end_datetime commodity charge_type billed_amount billed_rate reading_id
Upsert 383339888485 3833695876_0698360369 20220930 20220919 20220929 E TOTAL 50.76 ABC 3833695876_0698360369_e_30330919
Upsert 579935541033 5796675630_0437900000 20220419 20220318 20220418 E OFFPEAK 0.29 DEF 5796675630_0437900000_e_30330318
Upsert 358183305836 3584113533_8633030000 20200716 20200616 20200715 E PEAK 82.45 ABC 3584113533_8633030000_e_30300616
*/

Invoking: `sql_db_query_checker` with `SELECT MAX(billed_amount) FROM bill`

SELECT MAX(billed_amount) FROM bill

Invoking: `sql_db_query` with `SELECT MAX(billed_amount) FROM bill`

[(1114.02,)]
The maximum bill amount is 1114.02.
```

Agent’s Tracing in the Langsmith Tool

Conclusion

We analyzed the implementation of two types of agents, ReAct and Function Calling. Both are effective strategies for automating the reasoning and decision-making process without human input. However, function calling offers a more streamlined approach: because both the function definitions and the function calls follow a well-defined JSON structure, the LLM’s output is more predictable and less prone to parsing errors. The recent announcement of Parallel Function Calling at OpenAI DevDay adds further to the excitement.

Originally published on Medium - https://medium.com/@genai_cybage_software/implementing-react-and-function-calling-agent-types-for-efficient-natural-language-reporting-501f54dcce6e