MCP adoption has accelerated rapidly since Google, Microsoft, and OpenAI announced support for it within their ecosystems. The open-source community deserves a big shout-out as well: the numerous MCP server implementations it has contributed for engineers to try out have played a crucial role in that growth.

In this blog post, we will look at what MCP is, why it matters, how it works, its use cases, practical examples, and current limitations and challenges.

What is MCP?

Model Context Protocol (MCP) is an open-source standard that enables AI models to interact with external data sources and tools, facilitating real-time communication and helping extend and enhance AI applications. A common analogy describes it as a USB-C port for AI applications: a universal connector that lets client applications talk to different MCP servers in a standardized way.

In the rapidly advancing world of AI, integration remains a persistent challenge. How can AI applications effectively communicate with the tools and data sources that drive real-world utility? The answer lies in this new open standard.

At Cybage, we’re not just observing this evolution; we’re actively shaping it. With deep expertise in implementing MCP, we help enterprises streamline AI development, reduce integration overhead, and unlock the true value of their AI investments.

Why MCP?

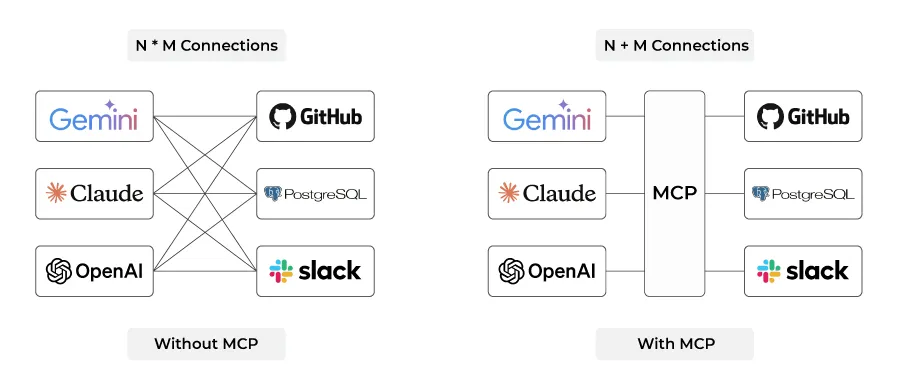

Tools are now a core component of GenAI-based application development. But if every team built its own tools to its own standards, integrating with commonly used systems such as GitHub, GitLab, Slack, email, Jira, and many more would become difficult. Anthropic introduced MCP to solve this problem.

Streamlining AI Tool Integration With MCP

To appreciate MCP’s significance, we should understand how it fits alongside other standardization efforts:

- APIs: Standardize how web applications interact with backend systems (servers, databases, services)

- Language Server Protocol (LSP): Standardizes how IDEs interact with language-specific tools for features like code navigation, analysis, and intelligence

- MCP: Standardizes how AI applications interact with external systems, providing access to prompts, tools, and resources

MCP bridges the gap between intelligent AI systems and the tools/data sources that make them truly useful in real-world applications. It creates a common language that allows AI applications to request and receive information from various external systems without requiring custom integration for each one.

Key Benefits of MCP

For different stakeholders in the AI ecosystem, MCP offers specific advantages:

- For AI application developers: Connect your app to any MCP server without writing a custom integration for each one

- For tool or API developers: Build an MCP server once, see adoption everywhere

- For end users: Access more powerful and context-rich AI applications

- For enterprises: Maintain clear separation of concerns between AI product teams

By standardizing these interactions, MCP dramatically reduces integration costs and accelerates AI deployment across organizations.

How Does MCP Work?

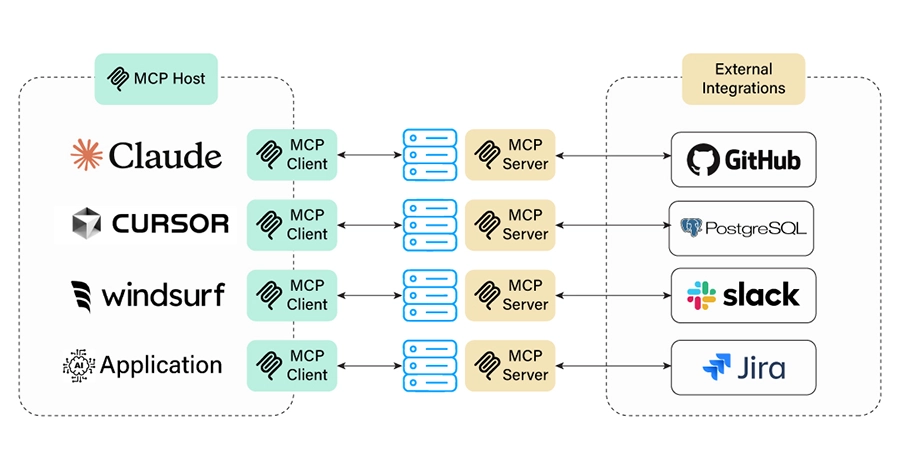

MCP Enables Seamless Connectivity Between AI Applications and External Tools Like GitHub, PostgreSQL, Slack, and Jira — Simplifying Integration and Accelerating Development

MCP follows a client-server architecture where AI applications (clients) communicate with tools and data sources (servers) through a standardized protocol. The key high-level components of the MCP architecture are:

- MCP Host: The AI application that needs data or tools

- MCP Client: The component within the AI application that communicates with MCP servers

- MCP Server: The middleman that connects the AI model to an external system
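On the wire, these components exchange JSON-RPC 2.0 messages. As a rough sketch, the first message a client sends to a server is an `initialize` request. The helper name `build_initialize_request`, the client name, and the protocol version string below are illustrative assumptions rather than values taken from this post.

```python
import json


def build_initialize_request(request_id: int, client_name: str, client_version: str) -> dict:
    """Build the JSON-RPC 2.0 envelope an MCP client sends to open a session."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            # Assumed protocol revision; use the one your SDK targets.
            "protocolVersion": "2025-03-26",
            # Capabilities the client offers to the server (roots, sampling).
            "capabilities": {"roots": {"listChanged": True}, "sampling": {}},
            "clientInfo": {"name": client_name, "version": client_version},
        },
    }


request = build_initialize_request(1, "example-host", "0.1.0")
print(json.dumps(request, indent=2))
```

In practice the SDKs build and send this message for you; the sketch only shows the shape of the handshake the host, client, and server agree on.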

MCP Architecture: Core Components

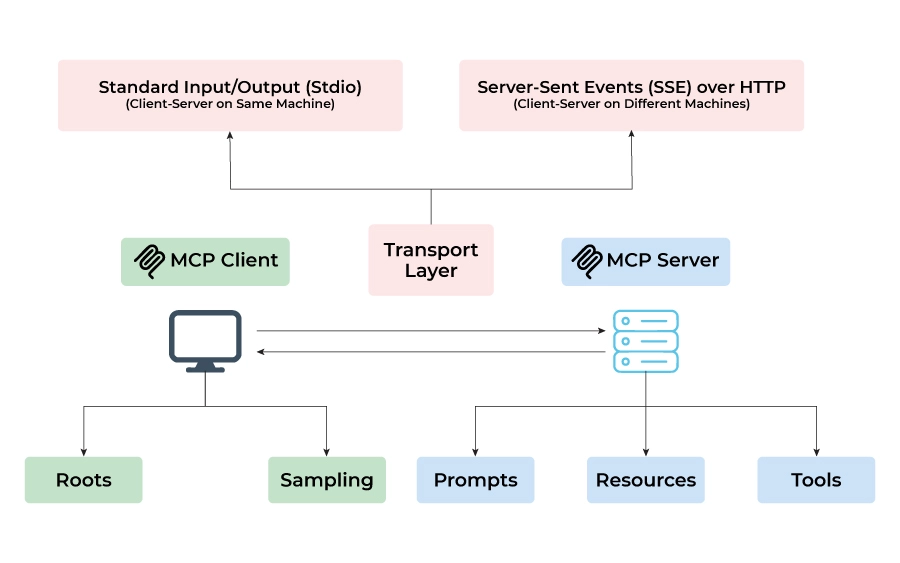

MCP Architecture Enables Flexible Communication via STDIO or SSE, Unlocking Access to Roots, Prompts, Tools, and More

MCP Client Components

Roots

Roots are a concept in MCP that define the boundaries where servers can operate. They provide a way for clients to inform servers about relevant resources and their locations.

Examples

file:///home/user/projects/myapp

https://api.example.com/v1
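To make the idea of a boundary concrete, here is a minimal sketch of how a server might check whether a URI falls under one of the roots a client has declared. The function `is_within_roots` is a hypothetical helper, not part of the MCP SDK, and the check is deliberately simple (same scheme and host, path equal to or beneath the root).

```python
import posixpath
from pathlib import PurePosixPath
from urllib.parse import urlparse


def _normalized_path(path: str) -> PurePosixPath:
    # Collapse "." and ".." segments so traversal tricks cannot escape a root.
    return PurePosixPath(posixpath.normpath(path or "/"))


def is_within_roots(uri: str, roots: list[str]) -> bool:
    """Return True if `uri` equals one of the roots or sits beneath it."""
    target = urlparse(uri)
    target_path = _normalized_path(target.path)
    for root in roots:
        base = urlparse(root)
        if (base.scheme, base.netloc) != (target.scheme, target.netloc):
            continue
        base_path = _normalized_path(base.path)
        if target_path == base_path or base_path in target_path.parents:
            return True
    return False


roots = ["file:///home/user/projects/myapp", "https://api.example.com/v1"]
print(is_within_roots("file:///home/user/projects/myapp/src/main.py", roots))
print(is_within_roots("file:///home/user/.ssh/id_rsa", roots))
```

Roots are information the client shares, so a server still has to perform a check like this itself if it wants to stay inside them.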

Sampling

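Sampling lets a server request LLM completions through the client. The server can make use of the model without holding its own API keys, while the client stays in control of model access and of what the user approves.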

MCP Server Components

Prompts

Prompts enable servers to define reusable prompt templates that clients can easily surface to users and LLMs.

Resources

Resources represent any kind of data (text or binary) that an MCP server wants to make available to clients. This can include:

- File contents

- Database records

- Screenshots and images, and more

Examples

file:///home/user/documents/report.pdf

postgres://database/customers/schema

screen://localhost/display1

Tools

Tools enable servers to expose executable functionality to clients. Through tools, LLMs can interact with external systems, perform computations, and take actions in the real world.
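As an illustration, a tool is advertised to clients with a name, a description, and a JSON Schema describing its input, and it is invoked with a `tools/call` request. The sketch below assumes a hypothetical `echo_tool` descriptor and two hypothetical helpers; the required-argument check is a simplification, not full JSON Schema validation.

```python
# Hypothetical tool descriptor, shaped like an entry in a `tools/list` result.
ECHO_TOOL = {
    "name": "echo_tool",
    "description": "Echo a message as a tool",
    "inputSchema": {
        "type": "object",
        "properties": {"message": {"type": "string"}},
        "required": ["message"],
    },
}


def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    """List required arguments that are absent from a proposed call."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


def build_tool_call(request_id: int, tool: dict, arguments: dict) -> dict:
    """Build a JSON-RPC `tools/call` request, refusing incomplete calls."""
    missing = missing_arguments(tool, arguments)
    if missing:
        raise ValueError(f"Missing required arguments: {missing}")
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    }


call = build_tool_call(2, ECHO_TOOL, {"message": "hello"})
print(call["params"])
```

The schema is what allows an LLM to decide which arguments to supply, which is why a clear description and a tight schema tend to matter as much as the tool's implementation.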

Transport Layer

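MCP supports multiple transport mechanisms for client-server communication. Local servers typically use STDIO, where the client launches the server as a subprocess and exchanges messages over standard input and output; the STDIO examples later in this post use it. For remote servers, the option covered here is SSE: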

Server-Sent Events (SSE)

- Enables server-to-client streaming with HTTP POST requests for client-to-server communication

- Ideal when only server-to-client streaming is needed

- Works well with restricted networks

- Suitable for implementing simple updates

This flexibility in transport mechanisms allows MCP to work in various network environments and use cases.
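To show what the server-to-client half of the SSE transport looks like, here is a minimal sketch of a parser for the `text/event-stream` format. The function `parse_sse` and the sample stream (including the `session_id` value) are illustrative assumptions; real clients rely on the SDK or an SSE library rather than hand-rolled parsing.

```python
from typing import Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[tuple[str, str]]:
    """Yield (event, data) pairs from the lines of a text/event-stream body."""
    event, data = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line terminates the current event.
            if data:
                yield event, "\n".join(data)
            event, data = "message", []
        elif line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        else:
            field, _, value = line.partition(":")
            value = value[1:] if value.startswith(" ") else value
            if field == "event":
                event = value
            elif field == "data":
                data.append(value)


# Hypothetical stream: the server first tells the client where to POST
# its messages, then streams JSON-RPC responses as "message" events.
stream = [
    "event: endpoint\n",
    "data: /messages/?session_id=abc123\n",
    "\n",
    ": keep-alive\n",
    "event: message\n",
    'data: {"jsonrpc": "2.0", "id": 1, "result": {}}\n',
    "\n",
]
events = list(parse_sse(stream))
for name, payload in events:
    print(name, payload)
```

Client-to-server traffic does not appear in this stream at all; it travels as separate HTTP POST requests, which is why the transport needs both an SSE endpoint and a message endpoint.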

Relevant Use Cases

The Cursor and Windsurf IDEs already support integrating with MCP servers. From an IDE perspective, this lets engineers handle much of their day-to-day work through a unified chat interface and reach the wider tool ecosystem using natural language. That said, the ecosystem of MCP server-based tools must still evolve and mature, and usability needs to improve before adoption becomes widespread. Here are examples of MCP servers that are useful from an engineer’s or developer’s perspective:

- Local Filesystem Handling

- GitLab Handling

- GitHub Handling

- Slack/Teams Messaging

- Email Integration

- JIRA Integration

- DevOps Tools Integration

- Database Integration

- Web Search

- Stack Overflow Search Tool

- API Docs Integration as a Knowledge Retrieval

- Standard guidelines/best practices from coding perspective

- Latest Packages/Library Docs

- Enterprise Knowledge Repositories

- And many more…

MCP Server References

Many MCP server hubs have emerged, and Anthropic has also open-sourced several MCP servers for reference. Here are important links for various MCP server hubs available:

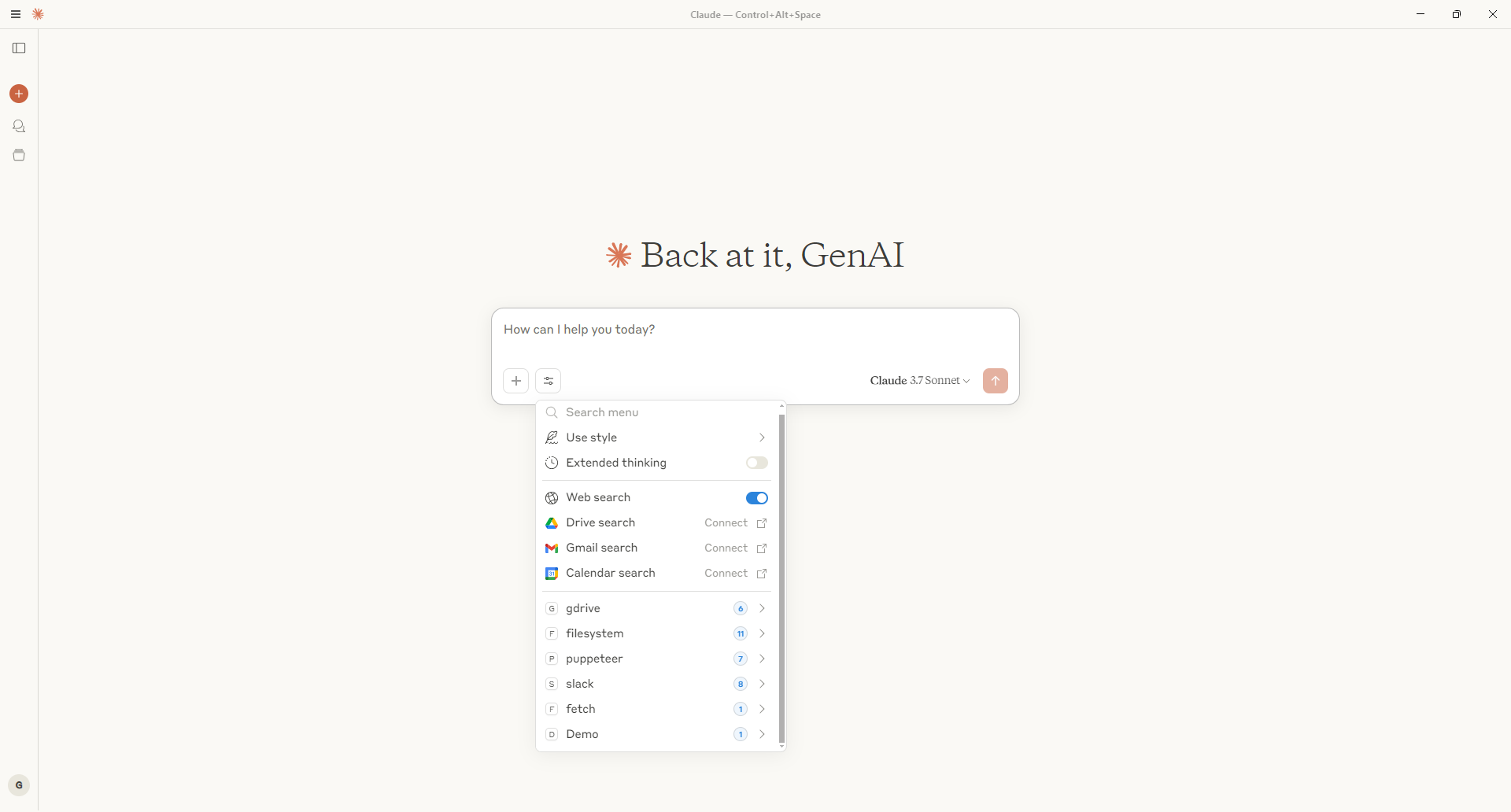

Claude Desktop MCP Configuration

The configuration file below lists the MCP servers configured within the Claude Desktop application.

Go to File > Settings > Developer to find all the servers configured & running within the Claude Desktop App.

Active MCP Server Configuration in Claude Using SSE for Real-Time Data Access

On Windows machines, the file is located at:

C:\Users\<user_dir>\AppData\Roaming\Claude\claude_desktop_config.json

{
  "mcpServers": {
    "gdrive": {
      "command": "python",
      "args": [
        "C:\\Users\\<user_dir>\\gmail\\gmail_server.py",
        "--creds-file-path",
        "C:\\Users\\<user_dir>\\mcp\\gmail\\creds\\.google\\client_creds.json",
        "--token-path",
        "C:\\Users\\<user_dir>\\mcp\\gmail\\creds\\.google\\app_tokens.json"
      ]
    },
    "fetch": {
      "command": "uvx",
      "args": [
        "mcp-server-fetch"
      ]
    },
    "filesystem": {
      "command": "npx",
      "args": [
        "-y",
        "@modelcontextprotocol/server-filesystem",
        "C:\\Users\\abc"
      ]
    },
    "puppeteer": {
      "command": "npx",
      "args": [
        "-y",
        "@modelcontextprotocol/server-puppeteer"
      ]
    },
    "slack": {
      "command": "npx",
      "args": [
        "-y",
        "@modelcontextprotocol/server-slack"
      ],
      "env": {
        "SLACK_BOT_TOKEN": "xoxb-8888882222222-8888882222222-4abcdefghijklmnoPQRST",
        "SLACK_TEAM_ID": "T02A2AB2C2T"
      }
    },
    "weather-sse": {
      "command": "npx",
      "args": [
        "mcp-remote",
        "http://localhost:8080/sse"
      ]
    }
  }
}
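Each entry under `mcpServers` is essentially a command line that the host launches as a local process. As a rough sketch of that mapping, the hypothetical helper `list_server_commands` below reads a configuration of this shape and prints the command for each server; the sample text reuses two entries from the configuration above.

```python
import json


def list_server_commands(config_text: str) -> dict[str, str]:
    """Map each configured server name to the command line it would be launched with."""
    config = json.loads(config_text)
    commands = {}
    for name, spec in config.get("mcpServers", {}).items():
        # "args" is optional; "command" is the executable to start.
        parts = [spec["command"], *spec.get("args", [])]
        commands[name] = " ".join(parts)
    return commands


sample = """
{
  "mcpServers": {
    "fetch": {"command": "uvx", "args": ["mcp-server-fetch"]},
    "puppeteer": {
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-puppeteer"]
    }
  }
}
"""

commands = list_server_commands(sample)
for name, command in commands.items():
    print(f"{name}: {command}")
```

Running a check like this before restarting the desktop app can catch malformed JSON early, since `json.loads` raises on a syntax error.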

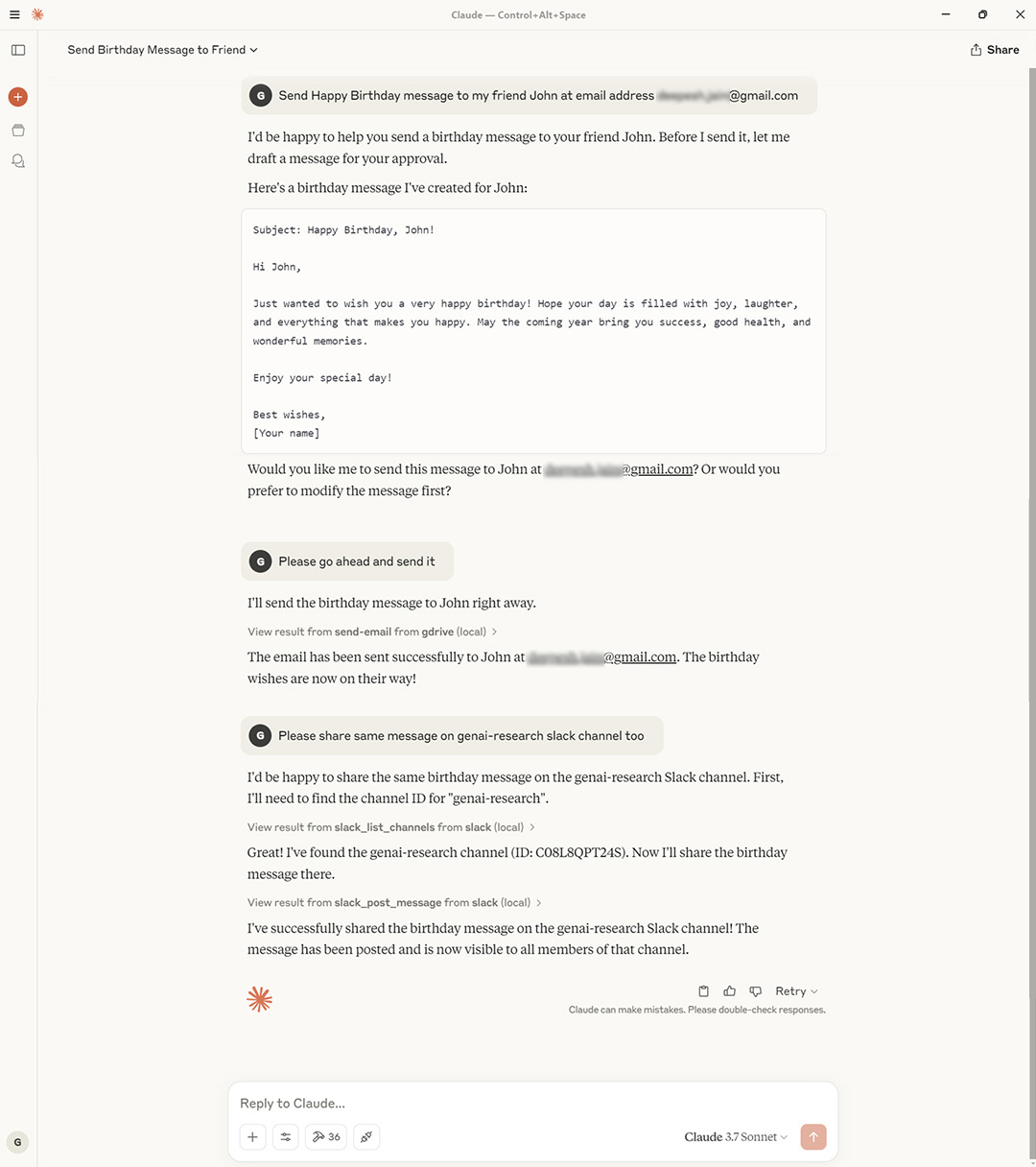

Email Preview Generated via MCP-Integrated Claude AI for Personalized Birthday Wishes

Implementing MCP: A Technical Deep Dive

Implementing MCP involves setting up both client and server components according to the protocol specifications. Here’s a guide to getting started:

STDIO-Based Local MCP Server

MCP servers can be built with the SDKs provided by Anthropic, which support Python, TypeScript, Java, Kotlin, and C# at the time of writing. Here is a quick example that uses the FastMCP module from the MCP Python package to build a minimal MCP server.

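# FileName :: echo_fastmcp_server.py
from mcp.server.fastmcp import FastMCP

# Create a FastMCP server named "Echo"
mcp = FastMCP("Echo")


# Register a static resource; the URI "echo://static" is an assumed placeholder
@mcp.resource("echo://static")
def echo_resource() -> str:
    """Static echo data"""
    return "Hi there. This is echo message!!!"


# Register a tool that the LLM can call with a message argument
@mcp.tool()
def echo_tool(message: str) -> str:
    """Echo a message as a tool"""
    return f"Tool echo: {message}"


# Register a reusable prompt template
@mcp.prompt()
def echo_prompt(message: str) -> str:
    """Create an echo prompt"""
    return f"Please process this message: {message}"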

This can be tested with the MCP Inspector utility provided by Anthropic. To launch MCP Inspector, run the following command from the directory where the code is located.

# Please install mcp & uv dependency to run following command

C:\Users\abc> mcp dev echo_fastmcp_server.py

MCP Inspector Interface Demonstrating STDIO Transport and Echo Resource Interaction

STDIO-Based Local MCP Server Using Low-Level APIs

The MCP SDK also provides low-level APIs that give more control over the server and its core components. Here is a quick example of a STDIO-based local MCP server built with the low-level APIs.

# FileName :: low_level_api_mcp_server.py
import asyncio

import mcp.server.stdio
import mcp.types as types
from mcp.server.lowlevel import NotificationOptions, Server
from mcp.server.models import InitializationOptions

# Create a server instance
server = Server("example-server")


# Handler for prompts/list: advertise the prompts this server offers
@server.list_prompts()
async def handle_list_prompts() -> list[types.Prompt]:
    return [
        types.Prompt(
            name="example-prompt",
            description="An example prompt template",
            arguments=[
                types.PromptArgument(
                    name="arg1", description="Example argument", required=True
                )
            ],
        )
    ]


# Handler for prompts/get: return the messages for a named prompt
@server.get_prompt()
async def handle_get_prompt(
    name: str, arguments: dict[str, str] | None
) -> types.GetPromptResult:
    if name != "example-prompt":
        raise ValueError(f"Unknown prompt: {name}")
    return types.GetPromptResult(
        description="Example prompt",
        messages=[
            types.PromptMessage(
                role="user",
                content=types.TextContent(type="text", text="Example prompt text"),
            )
        ],
    )


async def run():
    # Serve over STDIO: read requests from stdin, write responses to stdout
    async with mcp.server.stdio.stdio_server() as (read_stream, write_stream):
        await server.run(
            read_stream,
            write_stream,
            InitializationOptions(
                server_name="example",
                server_version="0.1.0",
                capabilities=server.get_capabilities(
                    notification_options=NotificationOptions(),
                    experimental_capabilities={},
                ),
            ),
        )


if __name__ == "__main__":
    asyncio.run(run())

Here are the details from the MCP Inspector tool.

MCP Inspector Interface Demonstrating STDIO Transport and Example Resource Retrieval
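Under the hood, the STDIO transport carries one JSON-RPC message per line. The toy dispatcher below is a sketch of that idea only: the `HANDLERS` table and `serve` function are hypothetical, it skips the initialization handshake entirely, and in-memory streams stand in for standard input and output.

```python
import io
import json
from typing import Callable, TextIO


def echo_tool(message: str) -> str:
    return f"Tool echo: {message}"


# Hypothetical method table; a real server also handles initialize, tools/list, etc.
HANDLERS: dict[str, Callable[[dict], dict]] = {
    "ping": lambda params: {},
    "tools/call": lambda params: {
        "content": [{"type": "text", "text": echo_tool(**params["arguments"])}]
    },
}


def serve(reader: TextIO, writer: TextIO) -> None:
    """Read one JSON-RPC request per line and write one response per line."""
    for line in reader:
        if not line.strip():
            continue
        request = json.loads(line)
        if "id" not in request:
            continue  # notifications carry no id and expect no response
        handler = HANDLERS.get(request["method"])
        if handler is None:
            response = {"jsonrpc": "2.0", "id": request["id"],
                        "error": {"code": -32601, "message": "Method not found"}}
        else:
            response = {"jsonrpc": "2.0", "id": request["id"],
                        "result": handler(request.get("params", {}))}
        writer.write(json.dumps(response) + "\n")


# In-memory streams stand in for sys.stdin and sys.stdout.
incoming = io.StringIO(
    json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                "params": {"name": "echo_tool", "arguments": {"message": "hi"}}}) + "\n"
    + json.dumps({"jsonrpc": "2.0", "id": 2, "method": "unknown/method"}) + "\n"
)
outgoing = io.StringIO()
serve(incoming, outgoing)
print(outgoing.getvalue())
```

Because stdout is the message channel, a STDIO server should send its own logging to stderr; anything else printed to stdout would corrupt the stream.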

SSE-Based Remote MCP Server

Note: The SSE transport is being replaced by the Streamable HTTP transport, as highlighted in the latest specification, with backwards compatibility retained. Implementation updates and examples were still a work in progress at the time of writing this blog.

The following Python code shows a quick implementation example of an SSE-based remote MCP server.

# FileName :: echo_sse_mcp_server.py
import uvicorn
import argparse
from starlette.applications import Starlette
from starlette.routing import Mount, Route
from starlette.requests import Request
from mcp.server import Server
from mcp.server.fastmcp import FastMCP
from mcp.server.sse import SseServerTransport

mcp = FastMCP("Echo SSE")


# Register a static resource; the URI "echo://static" is an assumed placeholder
@mcp.resource("echo://static")
def echo_resource() -> str:
    """Static echo data"""
    return "Hi there. This is SSE echo message!!!"


@mcp.tool()
def echo_tool(message: str) -> str:
    """Echo a message as a tool"""
    return f"Tool SSE Echo: {message}"


@mcp.prompt()
def echo_prompt(message: str) -> str:
    """Create an echo prompt"""
    return f"Please process this SSE message: {message}"


def create_starlette_app(mcp_server: Server, *, debug: bool = False) -> Starlette:
    """Create a Starlette application that can serve the provided MCP server with SSE."""
    # Clients POST their messages to this path
    sse = SseServerTransport("/messages/")

    async def handle_sse(request: Request) -> None:
        # Open the SSE stream and run the MCP server over it
        async with sse.connect_sse(
            request.scope,
            request.receive,
            request._send,
        ) as (read_stream, write_stream):
            await mcp_server.run(
                read_stream,
                write_stream,
                mcp_server.create_initialization_options(),
            )

    return Starlette(
        debug=debug,
        routes=[
            Route("/sse", endpoint=handle_sse),
            Mount("/messages/", app=sse.handle_post_message),
        ],
    )


if __name__ == "__main__":
    # FastMCP wraps a low-level Server; _mcp_server is a private attribute
    mcp_server = mcp._mcp_server

    parser = argparse.ArgumentParser(description='Run MCP SSE-based server')
    parser.add_argument('--host', default='0.0.0.0', help='Host to bind to')
    parser.add_argument('--port', type=int, default=8080, help='Port to listen on')
    args = parser.parse_args()

    # Bind SSE request handling to MCP server
    starlette_app = create_starlette_app(mcp_server, debug=True)
    uvicorn.run(starlette_app, host=args.host, port=args.port)

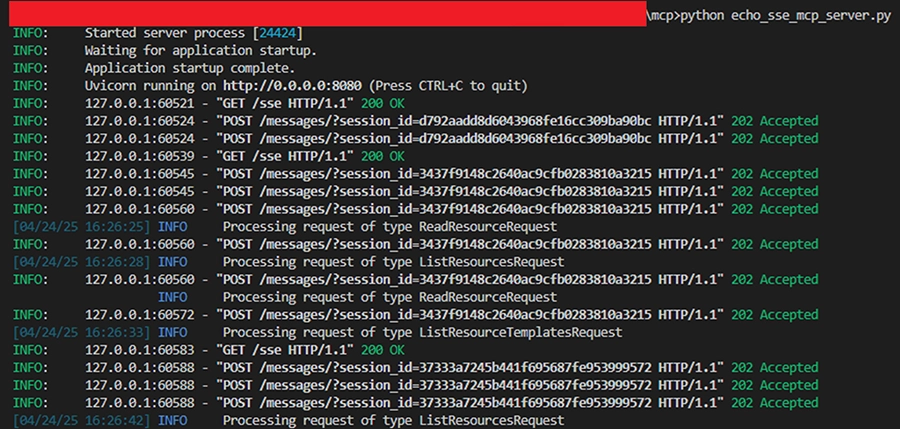

Execute the following command to run the remote MCP server code on your local machine for quick testing.

# Please install mcp & uv dependency to run following command

C:\Users\abc>python echo_sse_mcp_server.py

Server Log Output Displaying MCP Server Handling of HTTP Requests and Resource Operations

Launch MCP Inspector with either of the following commands. Select SSE as the Transport Type and point it to the remote MCP server URL (http://localhost:8080/sse).

# Command to launch mcp inspector

C:\Users\abc>mcp dev

OR

# Command to launch mcp inspector. Requires Node.js dependencies to be installed

C:\Users\abc>npx @modelcontextprotocol/inspector

MCP Inspector Interface Demonstrating SSE Transport and Echo Tool Execution

Best Practices for MCP Implementation

When implementing MCP, consider these best practices:

- Secure your endpoints: Implement proper authentication and authorization

- Handle errors gracefully: Provide meaningful error messages to clients

- Optimize for performance: Minimize latency in request handling

- Monitor and log: Track usage patterns and errors for troubleshooting

- Implement proper versioning: Allow for protocol evolution without breaking clients

Following these practices ensures a robust and maintainable MCP implementation.
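As one way to combine graceful error handling with logging, the sketch below wraps a tool function so that failures are logged on the server and returned to the client as a short, readable message. The wrapper `safe_tool_call` and the `divide` tool are hypothetical; the returned dictionary mirrors the shape of an MCP tool result with an `isError` flag.

```python
import logging
from typing import Any, Callable

logger = logging.getLogger("mcp.example")


def safe_tool_call(tool: Callable[..., str], arguments: dict[str, Any]) -> dict:
    """Run a tool and always return a tool-result-shaped dict, never a raw traceback."""
    try:
        text = tool(**arguments)
        return {"content": [{"type": "text", "text": text}], "isError": False}
    except TypeError as exc:
        # Typically wrong or missing arguments supplied by the model.
        logger.warning("Bad arguments for %s: %s", tool.__name__, exc)
        return {
            "content": [{"type": "text", "text": f"Invalid arguments: {exc}"}],
            "isError": True,
        }
    except Exception:
        # Log full details server-side; send only a generic message to the client.
        logger.exception("Tool %s failed", tool.__name__)
        return {
            "content": [{"type": "text", "text": "Internal error while running the tool."}],
            "isError": True,
        }


def divide(a: float, b: float) -> str:
    """Hypothetical tool used only to exercise the wrapper."""
    return str(a / b)


ok = safe_tool_call(divide, {"a": 6, "b": 3})
bad_args = safe_tool_call(divide, {"a": 6})
failure = safe_tool_call(divide, {"a": 1, "b": 0})
print(ok["isError"], bad_args["isError"], failure["isError"])
```

Keeping the detailed traceback in the server log while returning a short message to the client gives the model something it can act on without leaking internals.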

How Cybage Can Help with Your MCP Implementation

At Cybage, we’ve developed comprehensive expertise in implementing MCP-based solutions for enterprises across various industries. Our approach combines technical excellence with strategic business understanding to deliver AI systems that provide real value.

Cybage’s MCP Implementation Methodology

We follow a structured methodology for MCP implementation:

- Discovery and Assessment: We analyze your existing systems and identify opportunities for AI integration using MCP

- Architecture Design: We design a scalable and secure MCP architecture tailored to your specific needs

- Implementation: Our technical teams build both client and server components according to MCP specifications

- Integration: We connect your AI applications to relevant tools and data sources

- Testing and Optimization: We rigorously test the implementation and optimize for performance

- Deployment and Support: We provide smooth deployment and ongoing support

This comprehensive approach ensures successful MCP implementation with minimal disruption to your existing systems.

Why Choose Cybage for MCP Implementation?

Cybage offers several advantages when it comes to implementation of MCP:

- Technical Expertise: Our teams have deep knowledge of AI systems, integration technologies, and MCP specifications

- Industry Experience: We’ve worked with clients across various industries, giving us insights into domain-specific challenges

- Scalable Solutions: Our MCP implementations are designed to grow with your business

- Enterprise-Grade Security: We prioritize data security and compliance in all our implementations

- Continuous Innovation: We stay at the forefront of AI and integration technologies

By partnering with Cybage, you gain access to not just technical implementation but strategic guidance on how to leverage MCP for maximum business value.

Future of MCP: What’s Next?

As MCP continues to evolve, we anticipate several exciting developments:

- Expanded Capabilities: New capabilities for specialized domains like healthcare, finance, and manufacturing

- Enhanced Security: More sophisticated authentication and authorization mechanisms

- Performance Optimizations: Lower latency and higher throughput for real-time applications

- Broader Adoption: More AI applications and tools implementing MCP as standard

- Community-Driven Extensions: Industry-specific extensions to the core protocol

Cybage is actively involved in these developments, ensuring our clients benefit from the latest advances in MCP technology.

Conclusion: Embracing the MCP Revolution

The Model Context Protocol represents a significant step forward in making AI systems more useful and accessible. By providing a standardized way for AI applications to communicate with external systems, MCP removes a major barrier to AI adoption and integration.

At Cybage, we’re committed to helping our clients leverage MCP to build more powerful, context-aware AI applications. Whether you’re just starting your AI journey or looking to enhance existing systems, our expertise in MCP implementation can help you achieve your goals more efficiently and effectively.

Ready to explore how MCP can transform your AI initiatives? Contact Cybage today to learn more about our MCP implementation services and how we can help you stay ahead in the rapidly evolving AI landscape.